
Abstract:

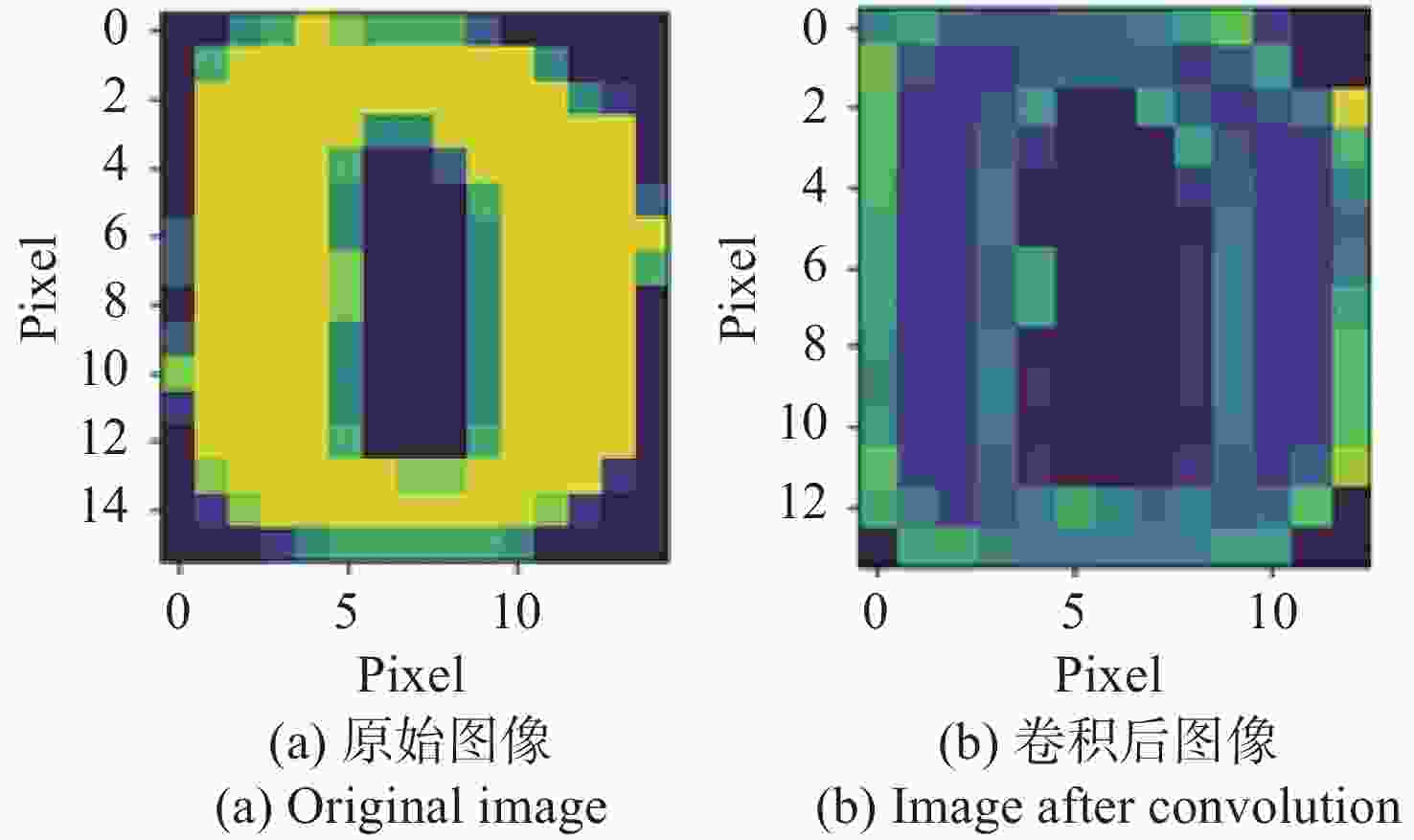

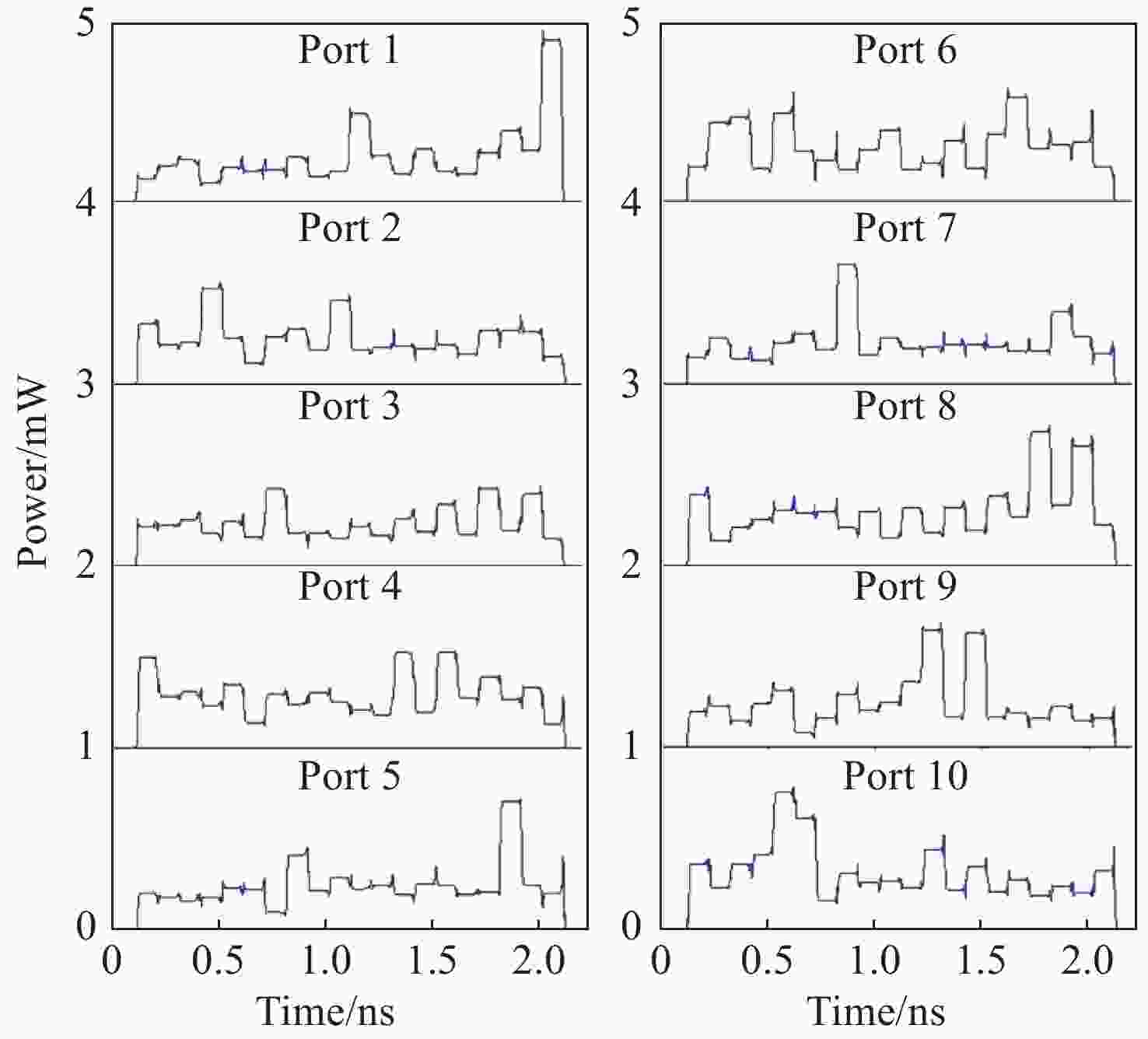

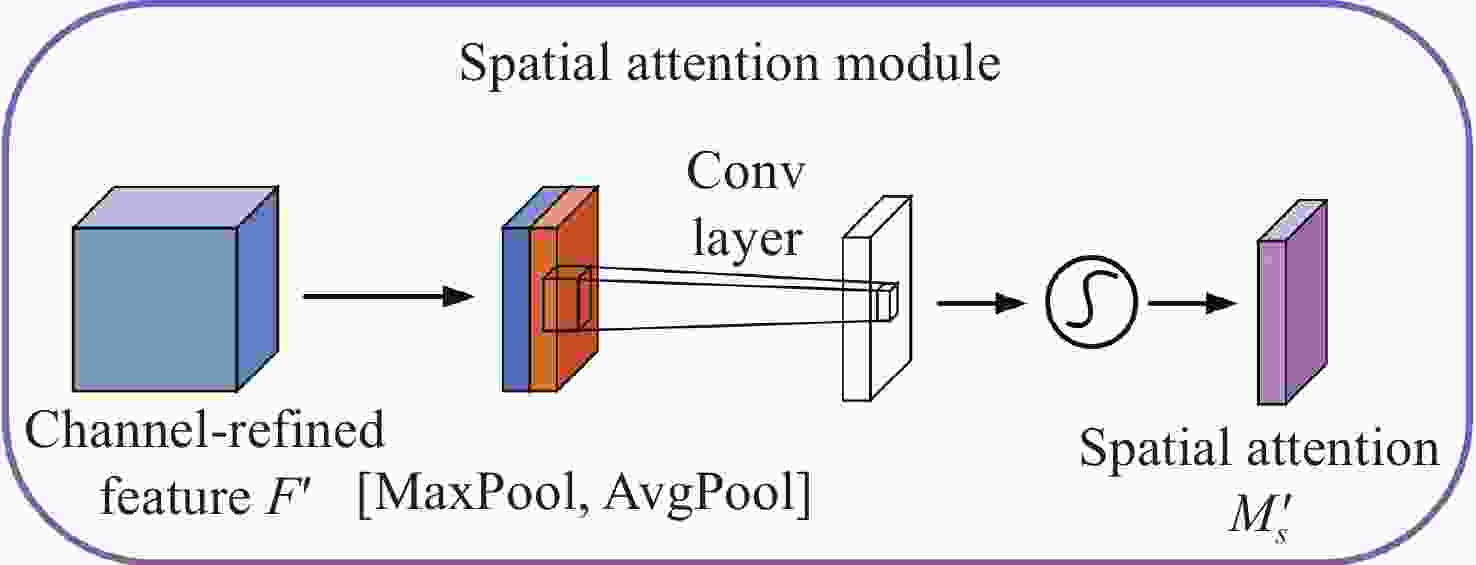

Current research on photonic neural networks focuses mainly on improving the performance of single-modal networks, while multimodal information processing has received little attention. Unlike single-modal networks, multimodal learning exploits the complementary information carried by different modalities, so the representations a model learns are more complete. This paper proposes a method that combines photonic neural networks with multimodal fusion. First, a heterogeneous photonic neural network is built by combining a photonic convolutional neural network with a photonic artificial neural network, and it is used to process the multimodal data. Second, an attention mechanism is introduced in the fusion stage to improve the fusion, which in turn raises classification accuracy. On the multimodal MNIST handwritten-digit classification task, the heterogeneous photonic neural network reaches an accuracy of 95.75% with concatenation fusion and 98.31% with attention-based fusion, outperforming many current state-of-the-art single-modal photonic neural networks. Compared with the electronic heterogeneous neural network, the training speed is improved by a factor of 1.7. Compared with single-modal photonic neural network models, the heterogeneous photonic neural network learns more complete representations and thereby effectively improves classification accuracy on this dataset.
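The two fusion strategies compared in the abstract can be illustrated with a minimal sketch. The abstract does not give the actual attention design, so the scoring rule below (a dot product with a hypothetical learned vector `score_weights`, followed by a softmax over the two modalities) is an assumption chosen only to show how attention-weighted fusion differs from plain concatenation. It runs on ordinary floating-point lists and does not model any photonic hardware.

```python
import math


def concat_fusion(feat_a, feat_b):
    """Fuse two modality feature vectors by plain concatenation."""
    return list(feat_a) + list(feat_b)


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fusion(feat_a, feat_b, score_weights):
    """Scale each modality by an attention weight, then concatenate.

    `score_weights` is a hypothetical learned vector (an assumption for
    illustration). Each modality's score is its dot product with this vector;
    a softmax over the two scores gives the per-modality weights.
    """
    if not (len(feat_a) == len(feat_b) == len(score_weights)):
        raise ValueError("feature vectors and score_weights must share a length")
    scores = [
        sum(w * x for w, x in zip(score_weights, feat))
        for feat in (feat_a, feat_b)
    ]
    alpha_a, alpha_b = softmax(scores)
    return [alpha_a * x for x in feat_a] + [alpha_b * x for x in feat_b]
```

With an all-zero `score_weights`, both modalities receive weight 0.5, so the result is just the concatenated vector scaled by one half. A trained scoring vector would instead emphasize whichever modality is more informative for a given sample.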

Key words: photonic neural network; multimodal; attention mechanism


Table 1. Time share of each training stage for the heterogeneous electronic neural network with concatenation fusion

| | Forward propagation | Backpropagation | Parameter update | Total |
|---|---|---|---|---|
| Time/s | 6.39 | 7.58 | 1.19 | 15.16 |
| Share/% | 42.14 | 50.00 | 7.86 | 100.00 |

Table 2. Time share of each training stage for the heterogeneous electronic neural network with attention-based fusion

| | Forward propagation | Backpropagation | Parameter update | Total |
|---|---|---|---|---|
| Time/s | 6.53 | 8.18 | 1.09 | 15.80 |
| Share/% | 41.33 | 51.75 | 6.92 | 100.00 |
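The share rows in Tables 1 and 2 are each stage's time divided by the total training time. The short sketch below recomputes them from the tabulated times; the dictionary keys and the function name `time_shares` are illustrative choices, not names from the paper. Because the tabulated times are rounded to two decimals, the recomputed shares can differ from the printed ones by a few hundredths of a percentage point.

```python
def time_shares(times_s):
    """Return each stage's share of the total time, in percent."""
    total = sum(times_s.values())
    return {stage: 100.0 * t / total for stage, t in times_s.items()}


# Stage times in seconds, copied from Tables 1 and 2.
TABLE_1 = {"forward": 6.39, "backward": 7.58, "update": 1.19}
TABLE_2 = {"forward": 6.53, "backward": 8.18, "update": 1.09}

if __name__ == "__main__":
    for name, times in (("Table 1", TABLE_1), ("Table 2", TABLE_2)):
        shares = time_shares(times)
        row = ", ".join(f"{stage}: {pct:.2f}%" for stage, pct in shares.items())
        print(f"{name}: {row}")
```

In both tables backpropagation takes about half of the training time and forward propagation a little over 40%.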

Table 3. Comparison of classification results of advanced methods

