
摘要:

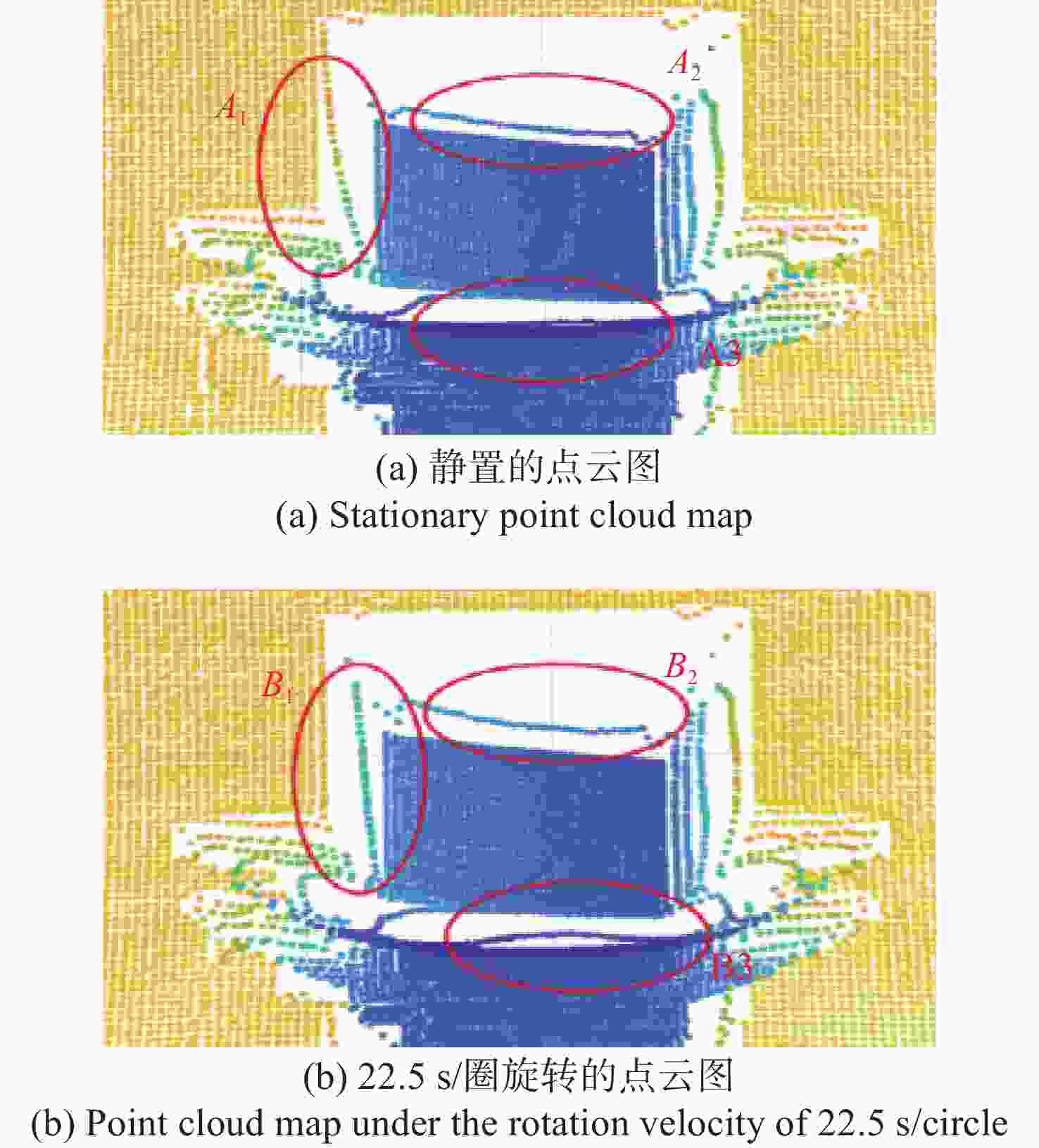

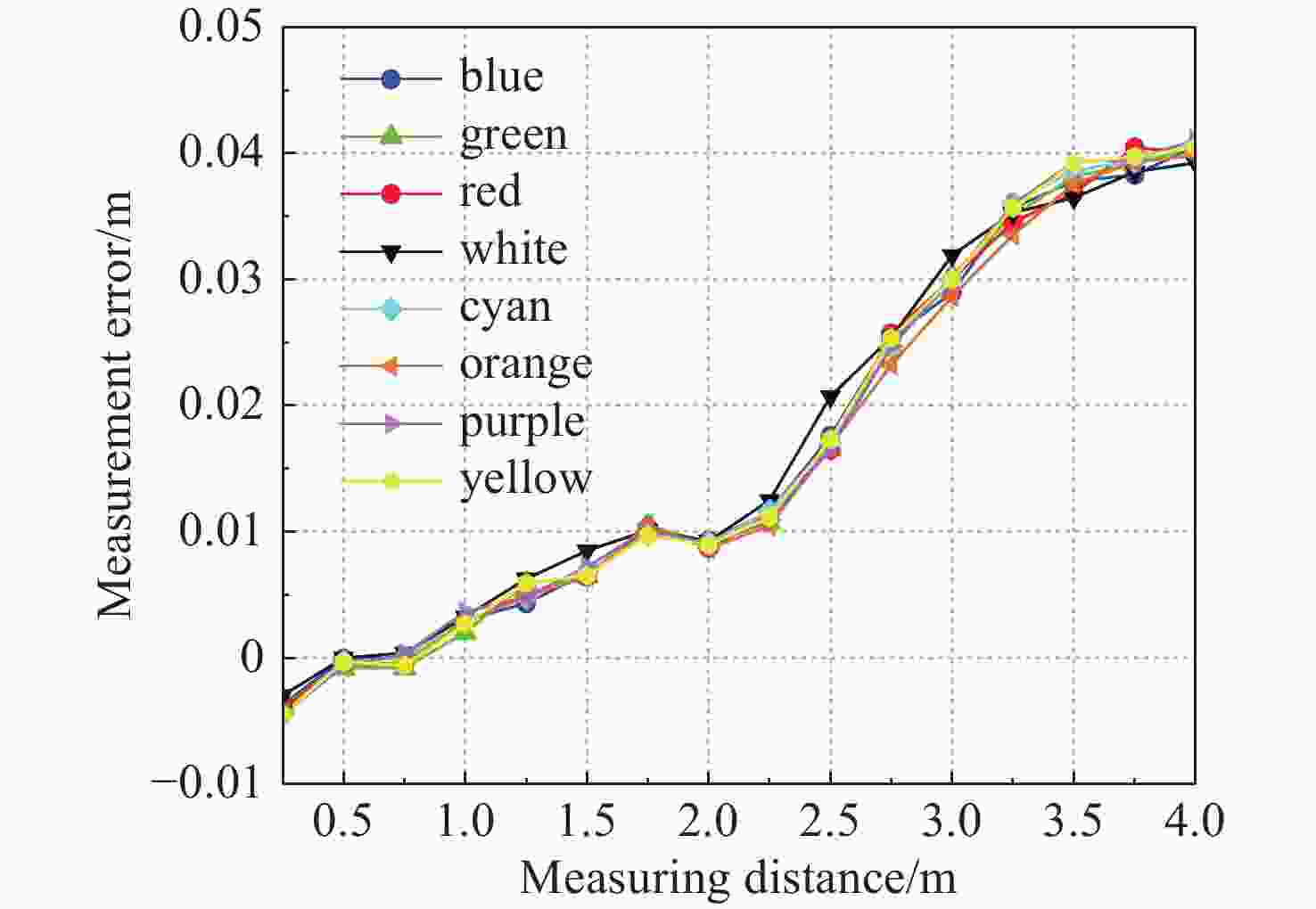

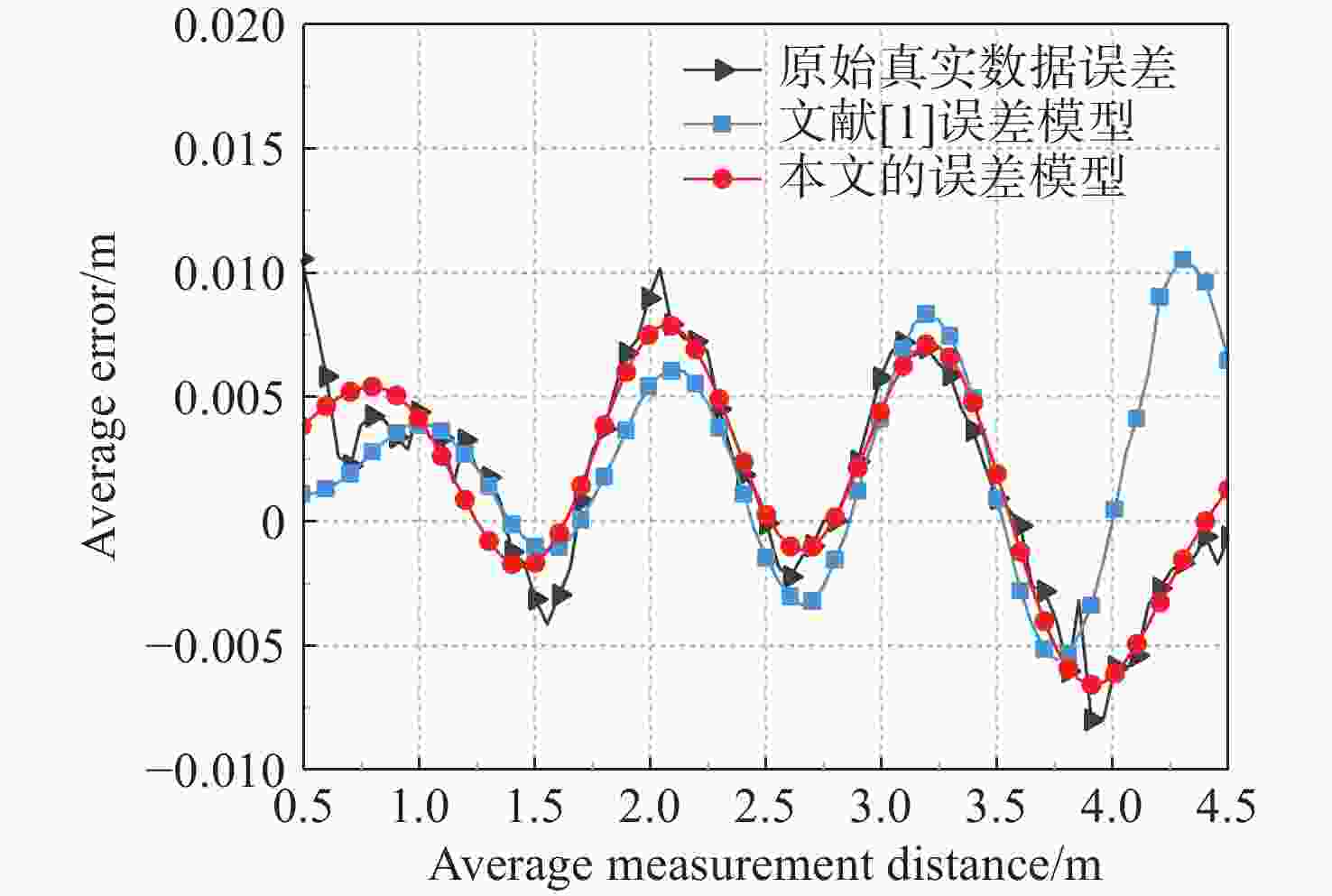

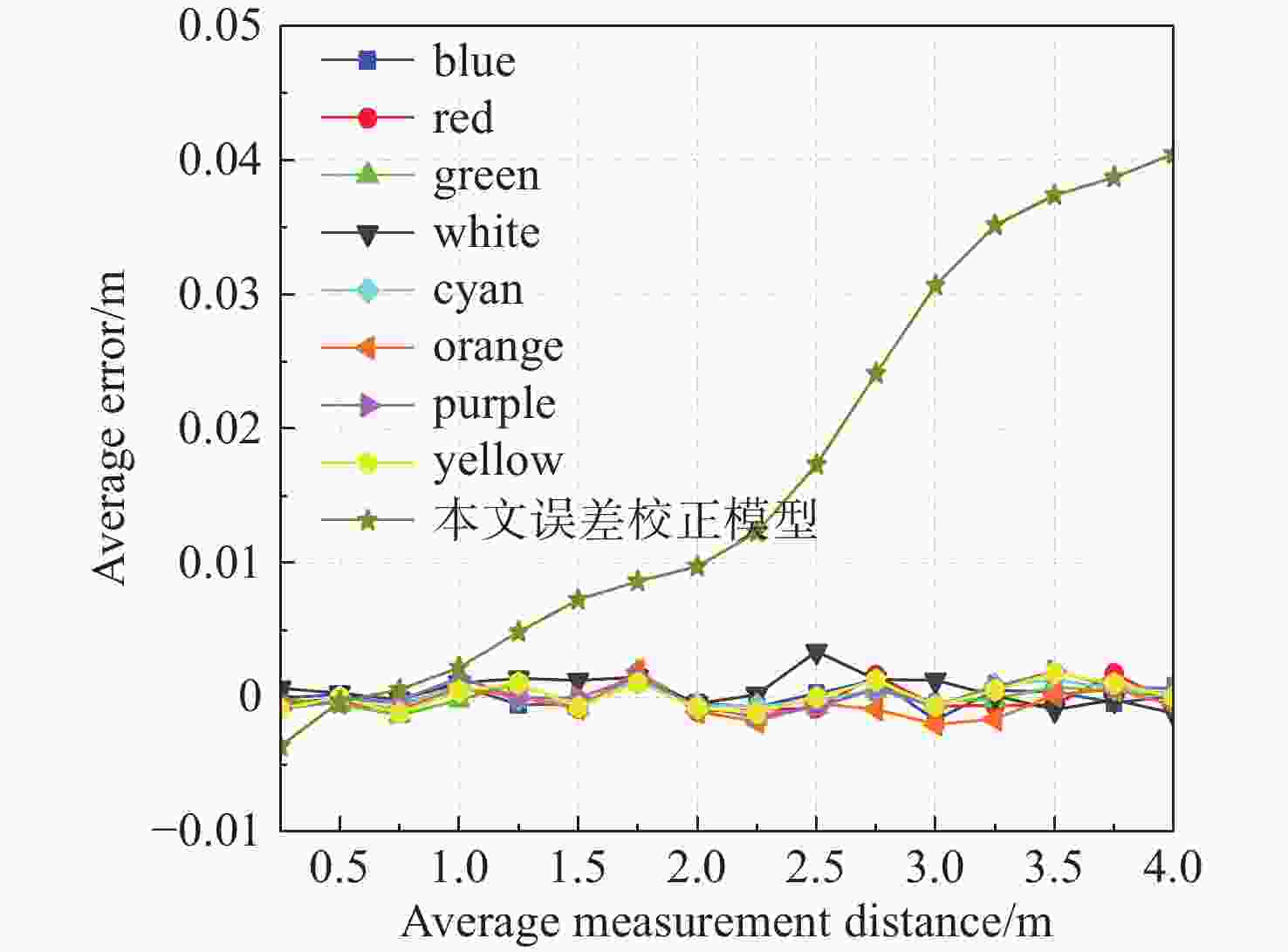

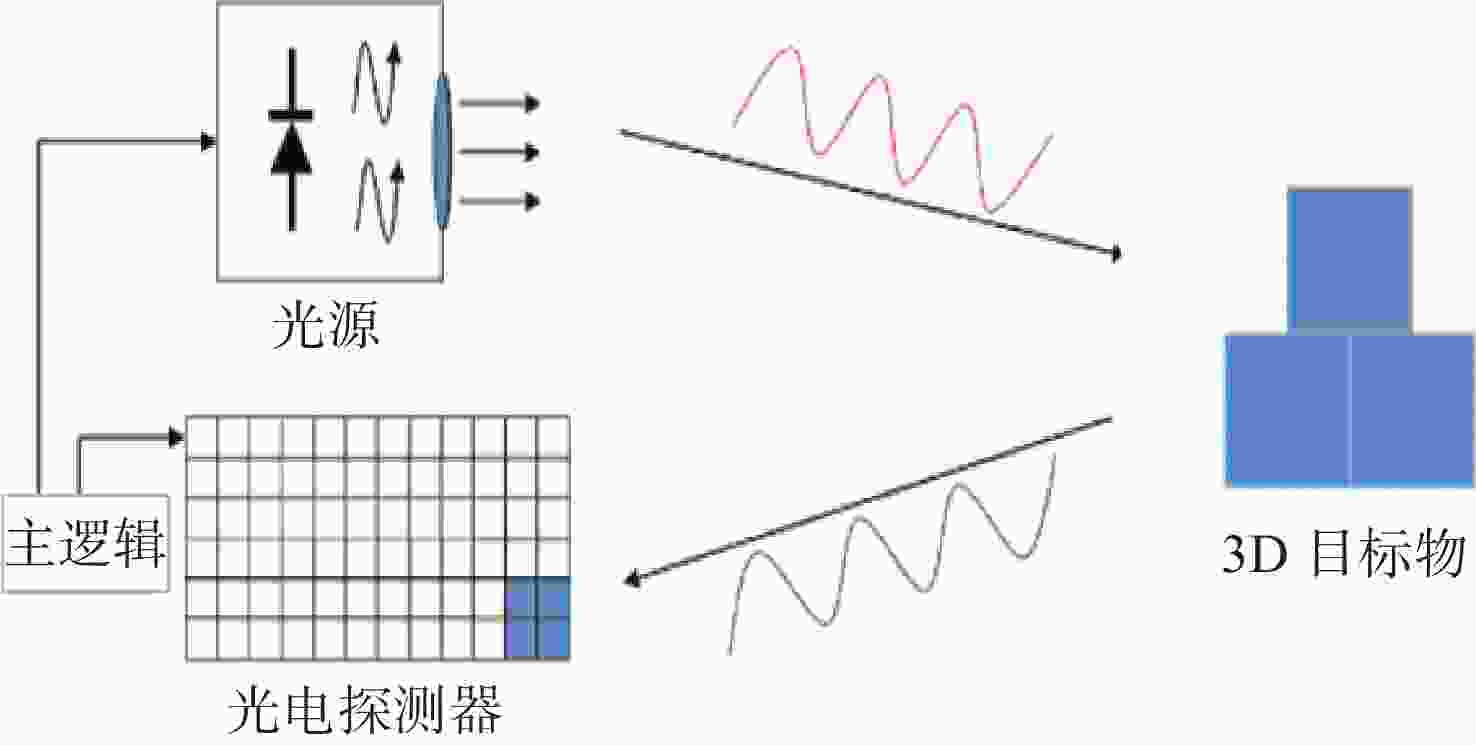

ToF (Time of Flight)深度相机是获取三维点云数据的重要手段之一,但ToF深度相机受到自身硬件和外部环境的限制,其测量数据存在一定的误差。本文针对ToF深度相机的非系统误差进行研究,通过实验验证了被测目标的颜色、距离和相对运动等因素均会对深度相机获取的数据产生影响,且影响均不相同。本文提出了一种新的测量误差模型对颜色和距离产生的误差进行校正,对于相对运动产生的误差,建立了三维运动模糊函数进行恢复,通过对所建立的校正模型进行数值分析,距离和颜色的残余误差小于4 mm,相对运动所带来的误差小于0.7 mm。本文所做工作改善了ToF深度相机的测量数据的质量,为开展三维点云重建等工作提供了更精准的数据支持。

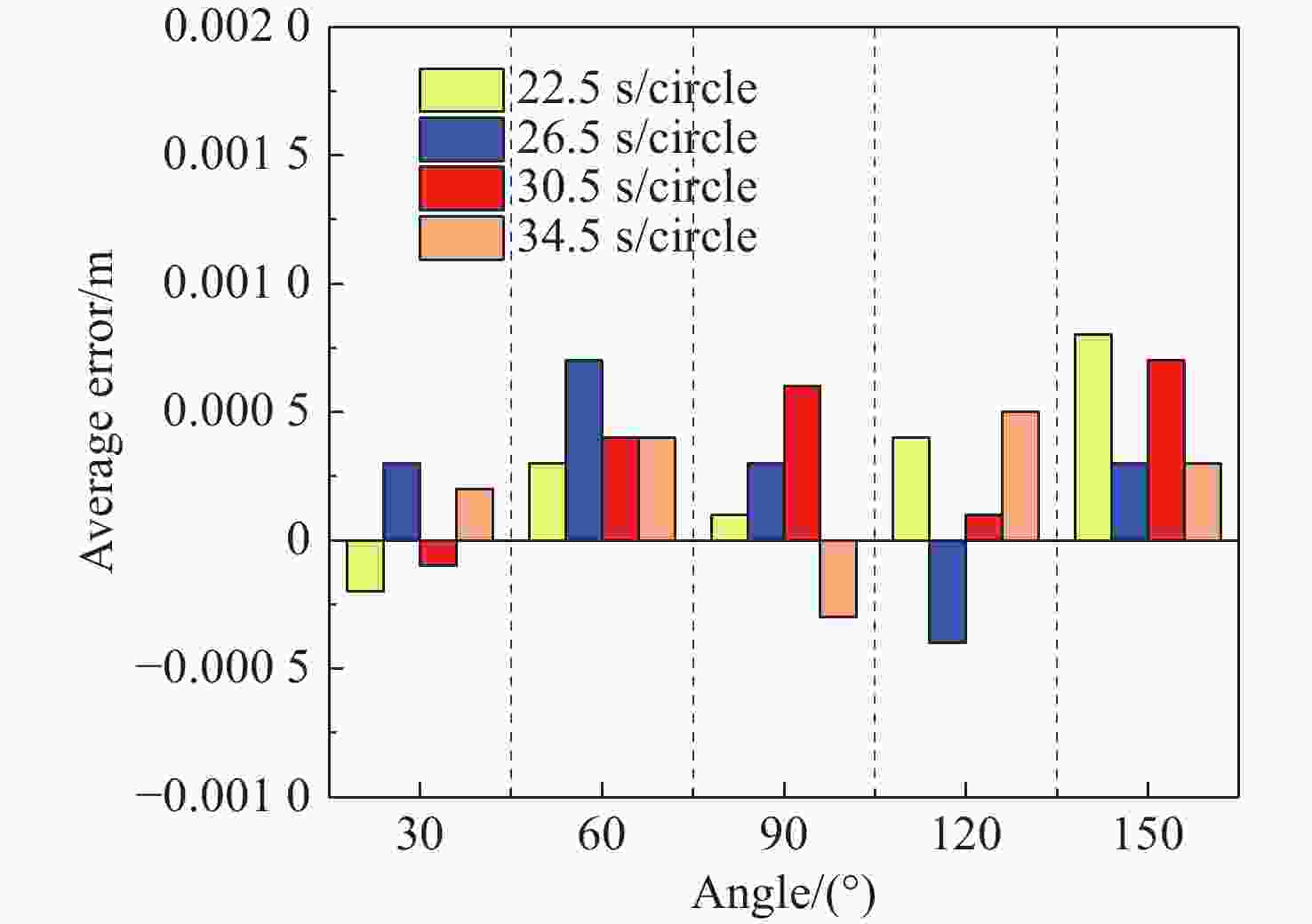

Abstract:Time of Flight (ToF) depth camera is one of the important means to obtain three-dimensional point cloud data, but ToF depth camera is limited by its own hardware and external environment, and its measurement data has certain errors. Aiming at the unsystematic error of ToF depth camera, this paper experimentally verifies that the color, distance, and relative motion of the measured target affect the data obtained by the depth camera, and the error effects are different. A new measurement error model is proposed to correct the error caused by color and distance. For the error caused by relative motion, a three-dimensional motion blur function is established to recover it. Through the numerical analysis of the established calibration model, the residual error of distance and color is less than 4 mm, and the error caused by relative motion is less than 0.7 mm. The work done in this paper improves the quality of the measurement data of the ToF depth camera, and provides more accurate data support for 3D point cloud reconstruction and other work.


Keywords:

- ToF depth camera

- depth error

- error correction


Figure 7. Average depth error at different measurement distances, with the errors of the model proposed in Ref. [1] and the model proposed in this paper

Table 1. Parameters of the proposed model

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| $a_0$ | 0.001684 | $w$ | 1.464 |
| $a_1$ | −0.002211 | $b_1$ | 0.0007332 |
| $a_2$ | −0.001091 | $b_2$ | 0.002141 |
| $a_3$ | −0.002439 | $b_3$ | 0.002785 |
| $a_4$ | 0.002291 | $b_4$ | −0.0004192 |
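The parameter names in Table 1 (one constant $a_0$, four pairs $a_k$, $b_k$, and a frequency $w$) match the usual parameterization of a fourth-order Fourier series. This section does not state the model equation, so the sketch below assumes the form $e(d) = a_0 + \sum_{k=1}^{4} \left( a_k \cos(k w d) + b_k \sin(k w d) \right)$. The function names `fourier_error` and `correct_depth`, the units of `d`, and the sign convention of the correction are illustrative assumptions, not taken from the paper.

```python
import math

# Parameter values copied from Table 1.
A = [0.001684, -0.002211, -0.001091, -0.002439, 0.002291]  # a0..a4
B = [0.0007332, 0.002141, 0.002785, -0.0004192]            # b1..b4
W = 1.464                                                  # w

def fourier_error(d, a=A, b=B, w=W):
    """Evaluate the ASSUMED fourth-order Fourier error model at distance d.

    Assumed form: a0 + sum_k (a_k * cos(k*w*d) + b_k * sin(k*w*d)).
    The units of d and of the result are not given in this section.
    """
    total = a[0]
    for k in range(1, len(a)):
        total += a[k] * math.cos(k * w * d) + b[k - 1] * math.sin(k * w * d)
    return total

def correct_depth(measured, a=A, b=B, w=W):
    """Hypothetical helper: subtract the modelled error from a measurement.

    Subtraction is an assumed sign convention; the paper may define the
    error with the opposite sign.
    """
    return measured - fourier_error(measured, a, b, w)
```

Under this assumed form the model is periodic in `d` with period `2 * math.pi / W`, so it should only be evaluated inside the distance range over which the parameters were fitted.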

[1] CHIABRANDO F, CHIABRANDO R, PIATTI D, et al. Sensors for 3D imaging: metric evaluation and calibration of a CCD/CMOS time-of-flight camera[J]. Sensors, 2009, 9(12): 10080-10096. doi: 10.3390/s91210080

[2] CATTINI S, CASSANELLI D, DI LORO G, et al. Analysis, quantification, and discussion of the approximations introduced by pulsed 3-D LiDARs[J]. IEEE Transactions on Instrumentation and Measurement, 2021, 70: 7007311.

[3] JUNG S, LEE Y S, LEE Y, et al. 3D reconstruction using 3D registration-based ToF-stereo fusion[J]. Sensors, 2022, 22(21): 8369. doi: 10.3390/s22218369

[4] LI Y F, GAO J, WANG X X, et al. Depth camera based remote three-dimensional reconstruction using incremental point cloud compression[J]. Computers and Electrical Engineering, 2022, 99: 107767. doi: 10.1016/j.compeleceng.2022.107767

[5] WANG X Q, SONG P, ZHANG W Y, et al. A systematic non-uniformity correction method for correlation-based ToF imaging[J]. Optics Express, 2022, 30(2): 1907-1924. doi: 10.1364/OE.448029

[6] WANG M X, ZHENG F, WANG Y Q, et al. Time-of-flight point cloud denoising method based on confidence level[J]. Infrared Technology, 2022, 44(5): 513-520. (in Chinese) doi: 10.11846/j.issn.1001-8891.2022.5.hwjs202205010

[7] KEPSKI M, KWOLEK B. Fall detection using ceiling-mounted 3D depth camera[C]. International Conference on Computer Vision Theory and Applications, IEEE, 2014: 640-647.

[8] LEE S, KIM J, LIM H, et al. Surface reflectance estimation and segmentation from single depth image of ToF camera[J]. Signal Processing: Image Communication, 2016, 47: 452-462. doi: 10.1016/j.image.2016.07.006

[9] CHIABRANDO F, PIATTI D, RINAUDO F. SR-4000 ToF camera: further experimental tests and first applications to metric surveys[J]. Remote Sensing and Spatial Information Sciences, 2010, 38: 149-154.

[10] JIMENEZ D, PIZARRO D, MAZO M. Single frame correction of motion artifacts in PMD-based time of flight cameras[J]. Image and Vision Computing, 2014, 32(12): 1127-1143. doi: 10.1016/j.imavis.2014.08.014

[11] AHMED F, CONDE M H, MARTÍNEZ P L, et al. Pseudo-passive time-of-flight imaging: simultaneous illumination, communication, and 3D sensing[J]. IEEE Sensors Journal, 2022, 22(21): 21218-21231. doi: 10.1109/JSEN.2022.3208085

[12] HASSAN M, EBERHARDT J, MALORODOV S, et al. Robust multiview 3D pose estimation using time of flight cameras[J]. IEEE Sensors Journal, 2022, 22(3): 2672-2684. doi: 10.1109/JSEN.2021.3133108

[13] WEYER C A, BAE K H, LIM K, et al. Extensive metric performance evaluation of a 3D range camera[J]. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 2008, 37: 939-944.

[14] MURE-DUBOIS J, HÜGLI H. Real-time scattering compensation for time-of-flight camera[C]. 5th International Conference on Computer Vision Systems (ICVS), ICVS, 2007: 1-12.

[15] LI SH R. Research on dynamic blurred image restoration[J]. China Computer & Communication, 2021, 33(9): 46-49. (in Chinese) doi: 10.3969/j.issn.1003-9767.2021.09.015
