
摘要:

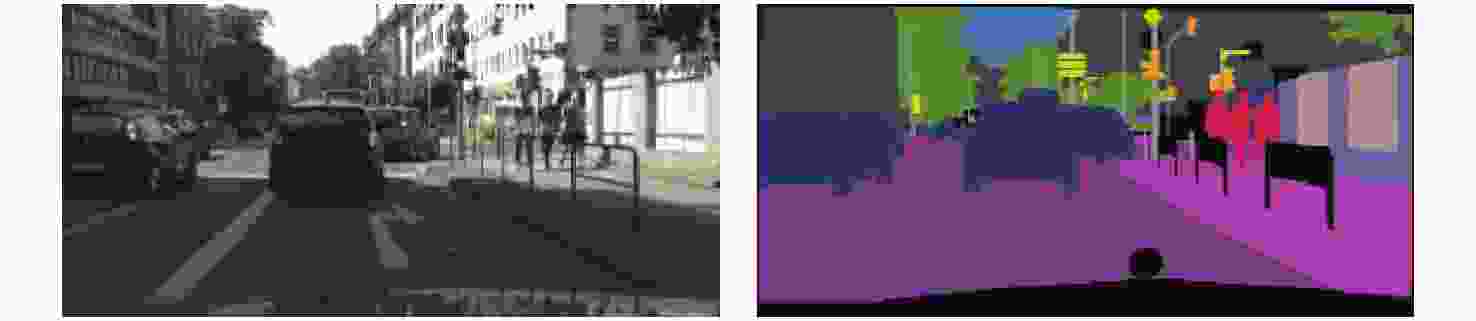

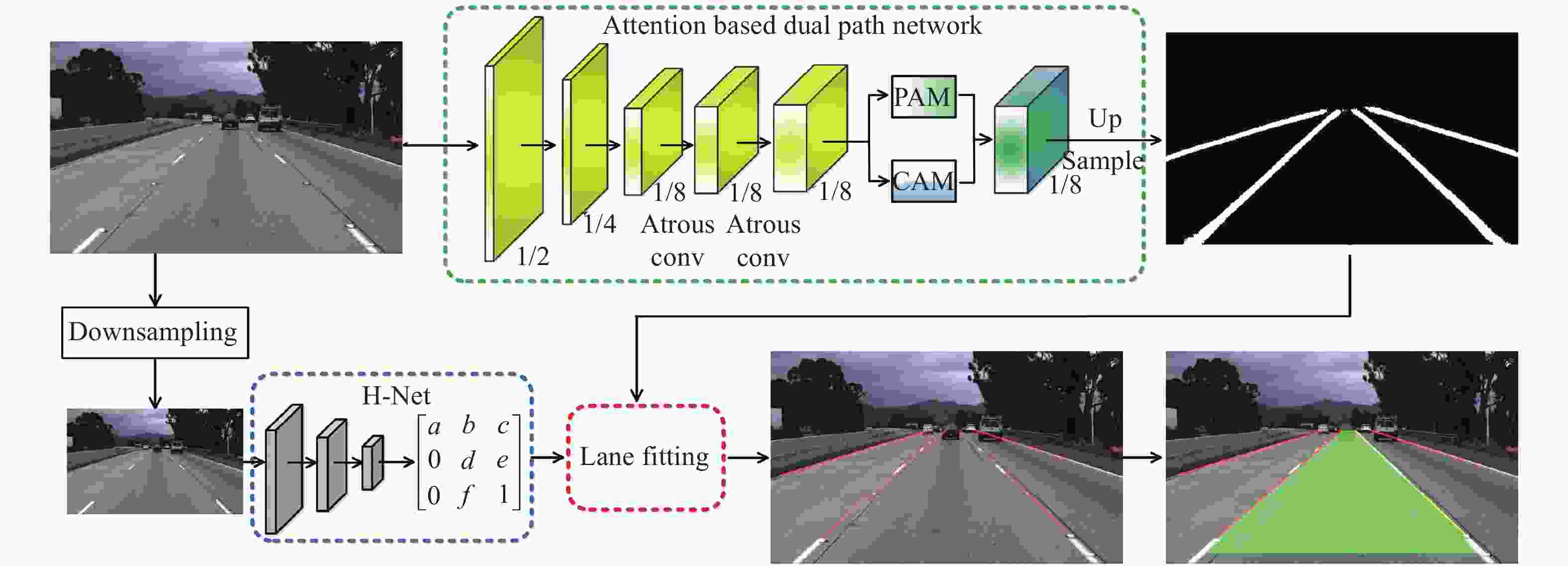

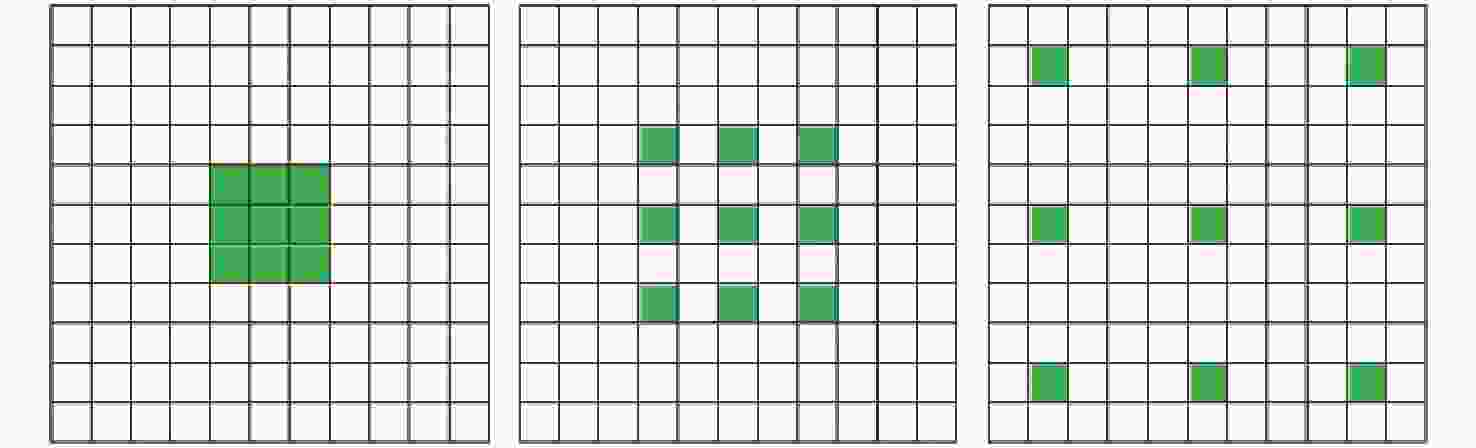

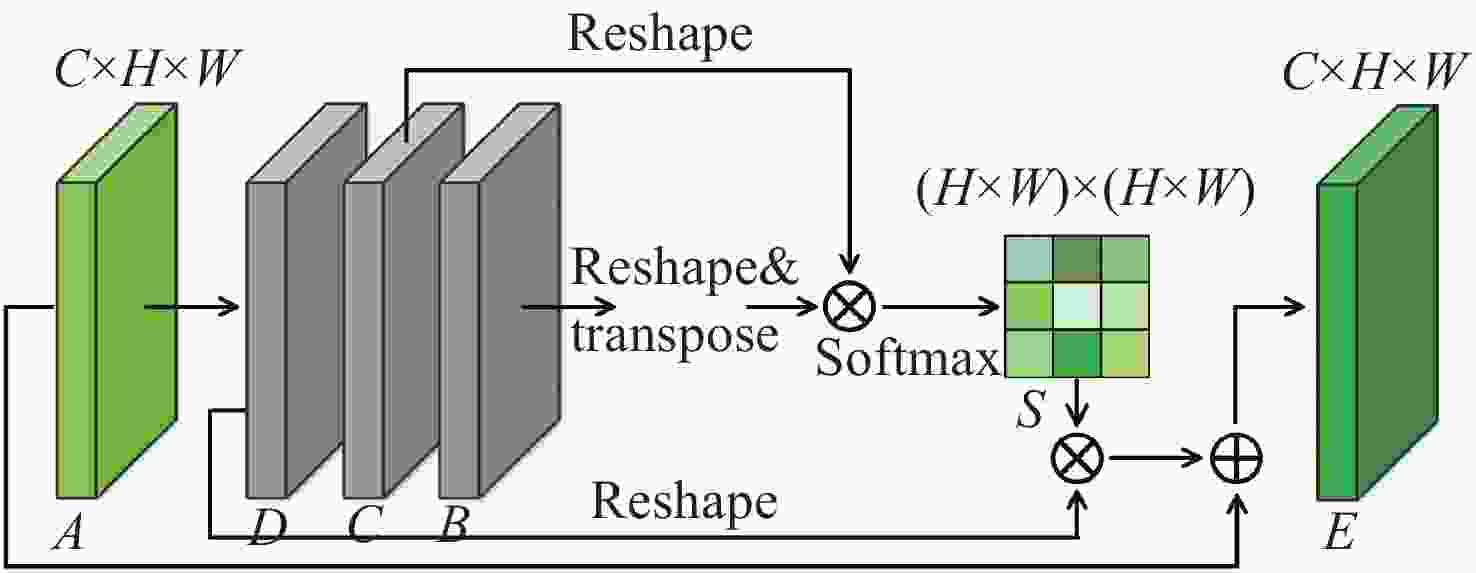

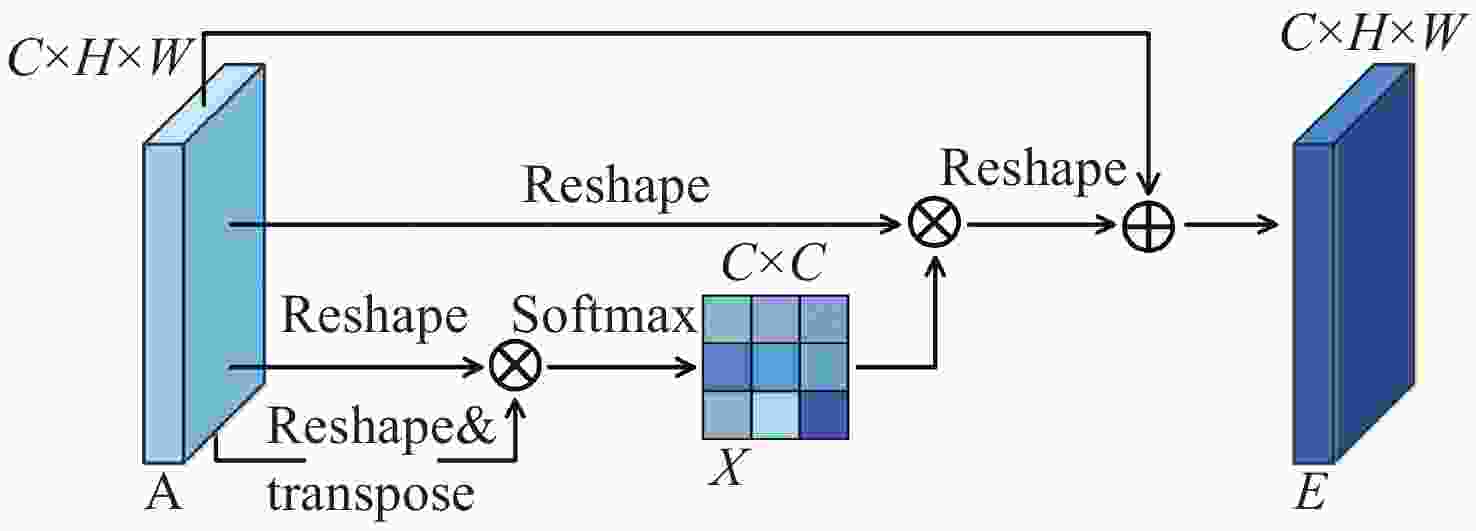

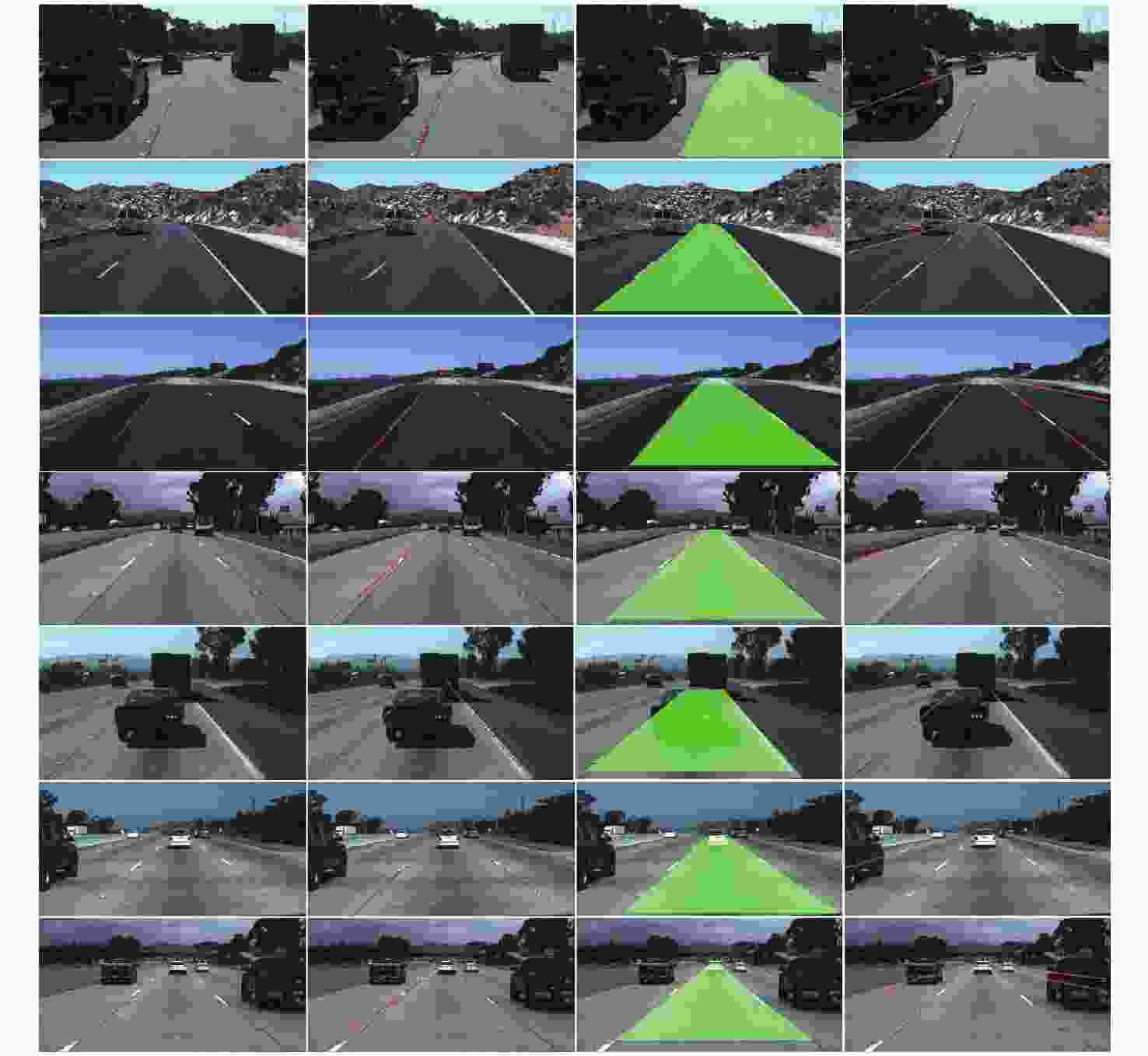

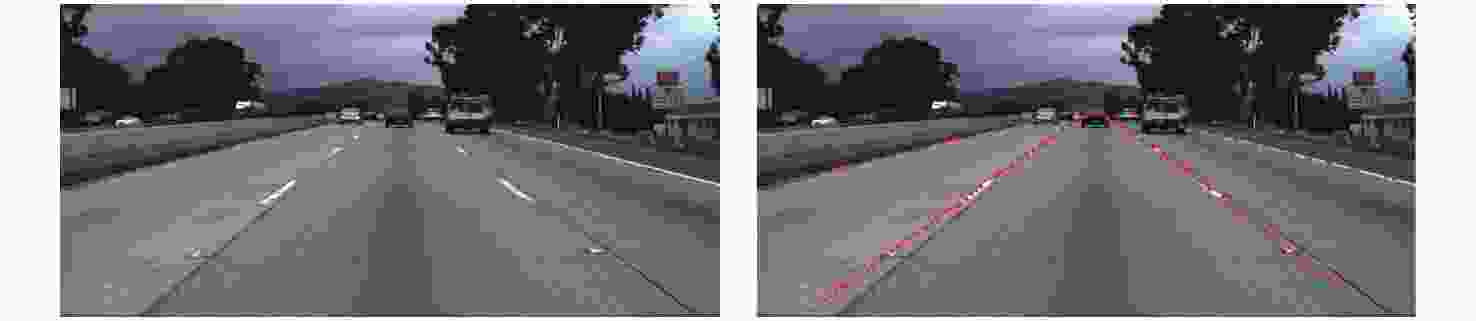

为了提升车道线检测算法在障碍物遮挡等复杂情况下的检测性能,本文提出了一种基于双注意力机制的多车道线检测算法。首先,本文通过设计基于空间和通道双注意力机制的车道线语义分割网络,得到分别代表车道线像素和背景区域的二值分割结果;然后,引入HNet网络结构,使用其输出的透视变换矩阵将分割图转换为鸟瞰视图,继而进行曲线拟合并逆变换回原图像空间,实现多车道线的检测;最后,将图像中线两侧车道线所包围的区域定义为目前行驶的行车车道。本文算法在Tusimple数据集凭借134 frame/s的实时性表现达到了96.63%的准确率,在CULane数据集取得了77.32%的精确率。实验结果表明,本文算法可以针对包括障碍物遮挡等不同场景下的多条车道线及行车车道进行实时检测,其性能相比较现有算法得到了显著的提升。

Abstract: To improve lane detection in complex scenes, such as scenes with obstacle occlusion, we propose a multi-lane detection algorithm based on a dual attention mechanism. First, we design a lane semantic segmentation network with spatial and channel attention. It outputs a binary segmentation map that separates lane pixels from the background. Then, we introduce HNet, whose predicted perspective transformation matrix warps the segmentation map into a bird's-eye view. Curves are fitted in this view and transformed back to the original image space, which yields the detected lane lines. Finally, we define the region enclosed by the lane lines on either side of the image's vertical center line as the ego lane, i.e., the lane the vehicle is currently driving in. Our algorithm achieves 96.63% accuracy on the TuSimple dataset while running in real time at 134 FPS, and it obtains 77.32% precision on the CULane dataset. The experiments show that the proposed algorithm can detect multiple lane lines and the ego lane in real time in different scenarios, including obstacle occlusion. They also show that it performs significantly better than existing lane detection algorithms.
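The abstract does not say how the spatial and channel attention modules are built. The reference list includes DANet [16], so the NumPy sketch below follows DANet's position (spatial) and channel attention modules. It is an illustration only and not the authors' network. The random matrices stand in for trained 1x1 convolution weights, and the C/8 query/key reduction follows DANet. Fusing the two branches by element-wise summation is an assumption of this sketch. The function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def position_attention(a, w_q, w_k, w_v, alpha):
    """Spatial (position) attention, DANet-style.

    a: feature map of shape (C, H, W).
    w_q, w_k: (C_r, C) matrices standing in for 1x1 query/key convolutions.
    w_v: (C, C) matrix standing in for the 1x1 value convolution.
    alpha: scale of the attention branch (a learned scalar in DANet).
    """
    c, h, w = a.shape
    x = a.reshape(c, h * w)                 # (C, N), N = H*W positions
    q, k, v = w_q @ x, w_k @ x, w_v @ x     # (C_r, N), (C_r, N), (C, N)
    att = softmax(q.T @ k, axis=1)          # (N, N); row n weights every position m for output n
    out = v @ att.T                         # out[:, n] = sum_m att[n, m] * v[:, m]
    return (alpha * out + x).reshape(c, h, w)


def channel_attention(a, beta):
    """Channel attention, DANet-style: channels re-weighted by mutual similarity."""
    c, h, w = a.shape
    x = a.reshape(c, h * w)                 # (C, N); no projections, as in DANet's channel module
    att = softmax(x @ x.T, axis=1)          # (C, C); row i weights every channel j for output i
    out = att @ x                           # out[i] = sum_j att[i, j] * x[j]
    return (beta * out + x).reshape(c, h, w)


def dual_attention(a, w_q, w_k, w_v, alpha, beta):
    """Fuse both branches by summation (an assumption for this sketch)."""
    return position_attention(a, w_q, w_k, w_v, alpha) + channel_attention(a, beta)


rng = np.random.default_rng(0)
C, H, W = 16, 8, 12
feat = rng.standard_normal((C, H, W))
w_q = 0.1 * rng.standard_normal((C // 8, C))   # random stand-ins for trained weights
w_k = 0.1 * rng.standard_normal((C // 8, C))
w_v = 0.1 * rng.standard_normal((C, C))
fused = dual_attention(feat, w_q, w_k, w_v, alpha=0.5, beta=0.5)
print(fused.shape)  # (16, 8, 12)
```

Both branches are residual. With alpha = beta = 0, each branch returns its input unchanged. DANet initialises these scales at zero and lets training increase them.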


Key words:

- lane detection

- semantic segmentation

- attention mechanism

- lane fitting
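The lane-fitting stage in the abstract has three steps: warp to a bird's-eye view, fit curves, and project back. The ego-lane rule then picks a lane on each side of the center line. A small NumPy sketch of these steps follows, under stated assumptions:

- The homography `H` is hand-picked. In the described method, HNet would predict it. Its zero pattern mirrors the constrained six-parameter form used for HNet in [13].
- Lanes are fitted as second-order polynomials x = f(y).
- The ego-lane rule compares lanes at their lowest fitted point.

The helpers `apply_homography`, `fit_lane`, and `ego_lane` and the synthetic lanes are hypothetical.

```python
import numpy as np


def apply_homography(H, pts):
    """Map (N, 2) pixel coordinates through a 3x3 homography and dehomogenise."""
    ph = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]


def fit_lane(H, pts, deg=2):
    """Warp lane pixels to a bird's-eye view, fit x' = p(y'), then map the fit back."""
    bev = apply_homography(H, pts)
    coeffs = np.polyfit(bev[:, 1], bev[:, 0], deg)         # near-vertical lanes: x as a function of y
    fitted_bev = np.column_stack([np.polyval(coeffs, bev[:, 1]), bev[:, 1]])
    return apply_homography(np.linalg.inv(H), fitted_bev)  # back to the image plane


def ego_lane(lanes, image_width):
    """Return indices of the nearest lane left and right of the vertical center line.

    Each lane is compared at its lowest fitted point (largest y), a simplification.
    """
    cx = image_width / 2
    left = right = None                                    # (index, x) of the best candidates so far
    for idx, lane in enumerate(lanes):
        x_bottom = lane[np.argmax(lane[:, 1]), 0]
        if x_bottom < cx:
            if left is None or x_bottom > left[1]:
                left = (idx, x_bottom)
        elif right is None or x_bottom < right[1]:
            right = (idx, x_bottom)
    return (left[0] if left else None, right[0] if right else None)


# Hypothetical homography with the constrained zero pattern; the values are made up.
H = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 0.002, 1.0]])
rng = np.random.default_rng(0)
rows = np.arange(400, 710, 10, dtype=float)               # image rows below the horizon
slopes = [-1.5, -0.5, 0.5, 1.5]                           # synthetic lanes through (640, 360)
raw = [np.column_stack([640 + k * (rows - 360) + rng.normal(0, 2.0, rows.size), rows])
       for k in slopes]
fitted = [fit_lane(H, lane) for lane in raw]
print(ego_lane(fitted, image_width=1280))                 # (1, 2)
```

Fitting x as a function of y suits near-vertical lane lines, for which a y = f(x) fit would not be single-valued. With this constrained form of `H`, image rows map to rows in the bird's-eye view. As a result, each fitted point keeps its original image row when projected back.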



Table 1. Quantitative experimental results of the proposed algorithm on the TuSimple dataset


Table 2. Quantitative experimental results of the proposed algorithm on the CULane dataset

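| Method | Normal | Crowd | Dazzle | Shadow | No line | Arrow | Curve | Cross | Night | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| SCNN[18] | 90.60 | 69.70 | 58.50 | 66.90 | 43.40 | 84.10 | 64.40 | 1990 | 66.10 | 71.60 |
| FastDraw[20] | 85.90 | 63.60 | 57.00 | 69.90 | 40.60 | 79.40 | 65.20 | 7013 | 57.80 | - |
| UFSD-18[1] | 87.70 | 66.00 | 58.40 | 62.80 | 40.20 | 81.00 | 57.90 | 1743 | 62.10 | 68.40 |
| UFSD-34[1] | 90.70 | 70.20 | 59.50 | 69.30 | 44.40 | 85.70 | 69.50 | 2037 | 66.70 | 72.30 |
| LaneATT[22] | 91.17 | 72.71 | 65.82 | 68.03 | 49.13 | 87.82 | 63.75 | 1020 | 68.58 | 75.13 |
| Ours | 91.21 | 76.33 | 69.51 | 73.25 | 50.16 | 88.72 | 71.25 | 1265 | 70.73 | 77.32 |

Cross gives the number of false positives (lower is better). All other columns are percentages, and "-" marks a value that was not reported.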
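To make the comparison in Table 2 concrete, the snippet below computes the per-scenario differences against the strongest baseline in the table, LaneATT [22]. It uses only the values listed there. Cross is handled separately because it is a false-positive count rather than a percentage.

```python
# Values copied from Table 2 (percentages); Cross is treated separately below.
scenarios = ["Normal", "Crowd", "Dazzle", "Shadow", "No line",
             "Arrow", "Curve", "Night", "Total"]
laneatt = [91.17, 72.71, 65.82, 68.03, 49.13, 87.82, 63.75, 68.58, 75.13]
ours = [91.21, 76.33, 69.51, 73.25, 50.16, 88.72, 71.25, 70.73, 77.32]

# Percentage-point difference per scenario, rounded to the table's precision
gains = {s: round(o - b, 2) for s, o, b in zip(scenarios, ours, laneatt)}
for name, delta in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:8s} {delta:+.2f}")

# For Cross a lower count is better, so a positive delta means more false positives
cross_delta = 1265 - 1020
print(f"Cross FP delta: {cross_delta:+d}")
```

By this arithmetic, the largest margins over LaneATT are in Curve (+7.50) and Shadow (+5.22), and the smallest is in Normal (+0.04). The Cross false-positive count is higher than LaneATT's (1265 vs. 1020).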

References:

[1] QIN Z Q, WANG H Y, LI X. Ultra fast structure-aware deep lane detection[C]. Proceedings of the 16th European Conference on Computer Vision, Springer, 2020: 276-291.

[2] CHEN X D, AI D H, ZHANG J CH, et al. Gabor filter fusion network for pavement crack detection[J]. Chinese Optics, 2020, 13(6): 1293-1301. (in Chinese) doi: 10.37188/CO.2020-0041

[3] REN F L, HE X, WEI ZH H, et al. Semantic segmentation based on DeepLabV3+ and superpixel optimization[J]. Optics and Precision Engineering, 2019, 27(12): 2722-2729. (in Chinese) doi: 10.3788/OPE.20192712.2722

[4] YU ZH P, REN X ZH, HUANG Y Y, et al. Detecting lane and road markings at a distance with perspective transformer layers[C]. Proceedings of the 23rd International Conference on Intelligent Transportation Systems, IEEE, 2020: 1-6.

[5] CHIU K Y, LIN S F. Lane detection using color-based segmentation[C]. Proceedings of the IEEE Intelligent Vehicles Symposium, IEEE, 2005: 706-711.

[6] HUR J, KANG S N, SEO S W. Multi-lane detection in urban driving environments using conditional random fields[C]. Proceedings of 2013 IEEE Intelligent Vehicles Symposium (IV), IEEE, 2013: 1297-1302.

[7] JUNG H, MIN J, KIM J. An efficient lane detection algorithm for lane departure detection[C]. Proceedings of 2013 IEEE Intelligent Vehicles Symposium (IV), IEEE, 2013: 976-981.

[8] BORKAR A, HAYES M, SMITH M T. A novel lane detection system with efficient ground truth generation[J]. IEEE Transactions on Intelligent Transportation Systems, 2012, 13(1): 365-374. doi: 10.1109/TITS.2011.2173196

[9] VAN GANSBEKE W, DE BRABANDERE B, NEVEN D, et al. End-to-end lane detection through differentiable least-squares fitting[C]. Proceedings of 2019 IEEE/CVF International Conference on Computer Vision Workshop, IEEE, 2019: 905-913.
[10] LIU T, CHEN ZH W, YANG Y, et al. Lane detection in low-light conditions using an efficient data enhancement: light conditions style transfer[C]. Proceedings of 2020 IEEE Intelligent Vehicles Symposium, IEEE, 2020: 1394-1399.

[11] CHANG D, CHIRAKKAL V, GOSWAMI S, et al. Multi-lane detection using instance segmentation and attentive voting[C]. Proceedings of the 19th International Conference on Control, Automation and Systems, IEEE, 2020: 1538-1542.

[12] KIM J, LEE M. Robust lane detection based on convolutional neural network and random sample consensus[C]. Proceedings of the 21st International Conference on Neural Information Processing, Springer, 2014: 454-461.

[13] NEVEN D, DE BRABANDERE B, GEORGOULIS S, et al. Towards end-to-end lane detection: an instance segmentation approach[C]. Proceedings of 2018 IEEE Intelligent Vehicles Symposium (IV), IEEE, 2018: 286-291.

[14] LEE H, SOHN K, MIN D. Unsupervised low-light image enhancement using bright channel prior[J]. IEEE Signal Processing Letters, 2020, 27: 251-255. doi: 10.1109/LSP.2020.2965824

[15] YOO S, LEE H S, MYEONG H, et al. End-to-end lane marker detection via row-wise classification[C]. Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, IEEE, 2020: 4335-4343.

[16] FU J, LIU J, TIAN H J, et al. Dual attention network for scene segmentation[C]. Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2019: 3141-3149.

[17] HE K M, ZHANG X Y, REN SH Q, et al. Deep residual learning for image recognition[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2016: 770-778.

[18] PAN X G, SHI J P, LUO P, et al. Spatial as deep: spatial CNN for traffic scene understanding[C]. Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, AAAI Press, 2018: 7276-7283.

[19] CHEN ZH P, LIU Q F, LIAN CH F. PointLaneNet: efficient end-to-end CNNs for accurate real-time lane detection[C]. Proceedings of 2019 IEEE Intelligent Vehicles Symposium (IV), IEEE, 2019: 2563-2568.

[20] PHILION J. FastDraw: addressing the long tail of lane detection by adapting a sequential prediction network[C]. Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 2019: 11574-11583.

[21] YOO S, LEE H S, MYEONG H, et al. End-to-end lane marker detection via row-wise classification[C]. Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, IEEE, 2020: 4335-4343.

[22] TABELINI L, BERRIEL R, PAIXÃO T M, et al. Keep your eyes on the lane: real-time attention-guided lane detection[C]. Proceedings of 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 2021: 294-302.

[23] CHEN X D, SHENG J, YANG J, et al. Ultrasound image segmentation based on a multi-parameter Gabor filter and multiscale local level set method[J]. Chinese Optics, 2020, 13(5): 1075-1084. (in Chinese) doi: 10.37188/CO.2020-0025

[24] ZHOU W ZH, FAN CH, HU X P, et al. Multi-scale singular value decomposition polarization image fusion defogging algorithm and experiment[J]. Chinese Optics, 2021, 14(2): 298-306. (in Chinese) doi: 10.37188/CO.2020-0099
